Caffe is certainly one of the best frameworks for deep learning, if not the best.

Let’s organize things step by step to get a good tutorial :).

Caffe

Install

First, install Caffe by following my tutorials for Ubuntu or Mac OS, with Python layers activated and the pycaffe path correctly set: export PYTHONPATH=~/technologies/caffe/python/:$PYTHONPATH.

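Launch the Python shell

In the IPython shell, from your Caffe repository, load the required libraries, such as caffe and numpy.

Set the computation mode to CPU with caffe.set_mode_cpu()

or to GPU with caffe.set_mode_gpu() (and caffe.set_device(0) to select the first GPU).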

Define a network model

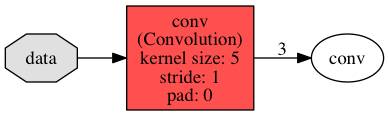

Let’s first create a very simple model with a single convolution layer composed of 3 convolutional neurons, with kernels of size 5x5 and a stride of 1.

This net will produce 3 output maps from an input map.

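For a convolution with receptive field (kernel) size $k$, stride $s$ and zero padding $p$, an input map of size $W$ gives an output map of size:

$$W_{out} = \left\lfloor \frac{W + 2p - k}{s} \right\rfloor + 1$$

Here, $(100 + 0 - 5)/1 + 1 = 96$, so each output map is 96x96.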
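The output size of a convolution is $\lfloor (W + 2p - k)/s \rfloor + 1$ along each spatial dimension. A minimal Python sketch of that formula; the function name conv_output_size is hypothetical and not part of pycaffe:

```python
def conv_output_size(input_size, kernel_size, stride=1, pad=0):
    """Spatial size of a convolution output along one dimension.

    Uses floor division, for a convolution with the given kernel size,
    stride and zero padding.
    """
    return (input_size + 2 * pad - kernel_size) // stride + 1


# The 100x100 input of conv.prototxt with a 5x5 kernel and stride 1:
print(conv_output_size(100, 5))  # 96
# A 360x480 image with the same kernel:
print(conv_output_size(360, 5), conv_output_size(480, 5))  # 356 476
```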

Create a first file, conv.prototxt, describing the neural network,

with one layer, a convolution, taken from the catalog of available layers.
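As a sketch, the Python snippet below writes such a conv.prototxt: one input blob "data" of shape 1x1x100x100 and one Convolution layer with 3 outputs, 5x5 kernels and stride 1. The filler settings (gaussian weights with std 0.01, constant bias 0) are assumptions chosen for illustration:

```python
# Minimal conv.prototxt: one input blob and a single Convolution layer.
# The weight_filler / bias_filler values are illustrative assumptions.
CONV_PROTOTXT = """name: "convolution"
input: "data"
input_dim: 1
input_dim: 1
input_dim: 100
input_dim: 100
layer {
  name: "conv"
  type: "Convolution"
  bottom: "data"
  top: "conv"
  convolution_param {
    num_output: 3
    kernel_size: 5
    stride: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
"""

with open("conv.prototxt", "w") as f:
    f.write(CONV_PROTOTXT)
```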

Load the net

The names of the input blobs of the net are given by print net.inputs.

The net contains two ordered dictionaries:

net.blobs, for the input data and its propagation through the layers:

- net.blobs['data'] contains the input data, an array of shape (1, 1, 100, 100)

- net.blobs['conv'] contains the data computed in layer ‘conv’, an array of shape (1, 3, 96, 96), initialized with zeros.

To print this information, iterate over net.blobs.items() and print the data.shape of each blob.

net.params, a vector of blobs per layer for the weight and bias parameters:

- net.params['conv'][0] contains the weight parameters, an array of shape (3, 1, 5, 5)

- net.params['conv'][1] contains the bias parameters, an array of shape (3,)

both initialized with the ‘weight_filler’ and ‘bias_filler’ algorithms. To print this information, iterate over net.params.items() and print the data.shape of v[0] and v[1].
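The shapes in net.blobs and net.params follow directly from the layer definition: one weight kernel per output map spanning all input channels, and one bias per output map. A small hypothetical helper (not a pycaffe function) that recomputes them:

```python
def conv_net_shapes(batch, channels, height, width, num_output, kernel_size, stride=1, pad=0):
    """Blob and parameter shapes of a net made of a single convolution layer."""
    out_h = (height + 2 * pad - kernel_size) // stride + 1
    out_w = (width + 2 * pad - kernel_size) // stride + 1
    return {
        "data": (batch, channels, height, width),
        "conv": (batch, num_output, out_h, out_w),
        # one kernel per output map, spanning all input channels
        "conv_weights": (num_output, channels, kernel_size, kernel_size),
        # one bias per output map
        "conv_bias": (num_output,),
    }


for name, shape in conv_net_shapes(1, 1, 100, 100, 3, 5).items():
    print(name, shape)
```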

Blobs are memory abstraction objects (their data lives on the CPU or on the GPU depending on the mode), and the data is exposed in the data field as a NumPy array, for example net.blobs['conv'].data.

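To draw the network, a simple Python command is enough, using the draw_net.py script shipped with Caffe: python python/draw_net.py conv.prototxt my_net.png

This draws the following image: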

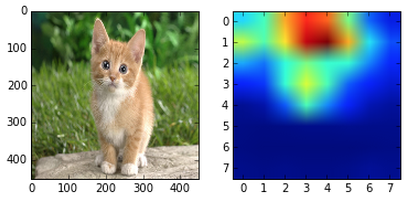

Compute the net output on an image as input

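Let’s load a gray image of size 1x360x480 (channel x height x width) into the previous net.

We need to reshape the data blob from (1, 1, 100, 100) to the new size (1, 1, 360, 480) to fit the image, with net.blobs['data'].reshape(1, 1, 360, 480), and then copy the image into net.blobs['data'].data.

Let’s compute the blobs given this input with net.forward().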

Now net.blobs['conv'] is filled with data, and the 3 output maps, one per neuron (net.blobs['conv'].data[0, i]), can be plotted easily.
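For each of the 3 neurons, the convolution layer slides its kernel over the image, takes the weighted sum under the kernel and adds the bias. Caffe computes this efficiently (im2col followed by a matrix product); the naive Python loop below is only a sketch of the same computation for a single-channel input, with no padding. Like Caffe, it does not flip the kernel (cross-correlation):

```python
def conv2d_valid(image, kernels, biases, stride=1):
    """Naive multi-output 2D convolution of a single-channel image.

    image: H x W nested lists; kernels: list of K x K nested lists;
    biases: one value per kernel. Returns one output map per kernel.
    """
    h, w = len(image), len(image[0])
    outputs = []
    for kernel, bias in zip(kernels, biases):
        k = len(kernel)
        out_h = (h - k) // stride + 1
        out_w = (w - k) // stride + 1
        out_map = []
        for i in range(out_h):
            row = []
            for j in range(out_w):
                acc = bias
                # weighted sum of the K x K window at (i, j)
                for di in range(k):
                    for dj in range(k):
                        acc += kernel[di][dj] * image[i * stride + di][j * stride + dj]
                row.append(acc)
            out_map.append(row)
        outputs.append(out_map)
    return outputs


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
maps = conv2d_valid(img, [[[1, 0], [0, 1]], [[0, 0], [0, 0]]], [0, 0.5])
print(maps[0])  # [[6, 8], [12, 14]]
```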

To save the net parameters net.params, just call net.save() with the path of a .caffemodel file, for example net.save('mymodel.caffemodel').

Load pretrained parameters to classify an image

In the previous net, the weight and bias params have been initialized randomly.

It is possible to load trained parameters instead; in this case, the net produces a classification.

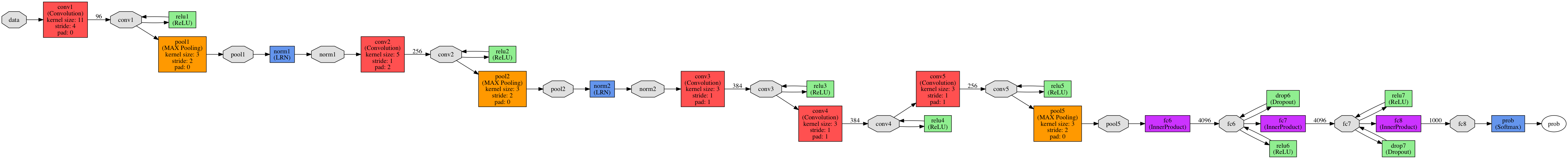

Many models trained by the community can be downloaded from the Caffe Model Zoo, such as car classification, flower classification, digit classification…

Model information is written in GitHub Gist format. The parameters are saved in a .caffemodel file specified in the gist. To download the model, run ./scripts/download_model_binary.py <dirname>

where <dirname> is the gist directory (by default, the gist is saved in the models directory).

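Let’s download the CaffeNet model with ./scripts/download_model_binary.py models/bvlc_reference_caffenet, and the labels corresponding to the classes with ./data/ilsvrc12/get_ilsvrc_aux.sh.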

This model has been trained on preprocessed images, so you need to apply the same preprocessing to your image, with a preprocessor (caffe.io.Transformer), before copying it into the data blob.
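The preprocessing usually configured on caffe.io.Transformer for CaffeNet (set_transpose, set_channel_swap, set_raw_scale, set_mean) applies, in this order: HxWxC to CxHxW transpose, RGB to BGR channel swap, scaling from [0, 1] to [0, 255], and per-channel mean subtraction. A pure-Python sketch of these steps on nested lists; the mean values used in the example are approximate ImageNet BGR means, taken here as an assumption:

```python
def preprocess(image_hwc, mean_bgr, raw_scale=255.0):
    """Turn an H x W x 3 RGB image with values in [0, 1] into a 3 x H x W
    BGR array scaled to [0, raw_scale], minus the per-channel mean."""
    h, w = len(image_hwc), len(image_hwc[0])
    # transpose (H, W, C) -> (C, H, W)
    chw = [[[image_hwc[y][x][c] for x in range(w)] for y in range(h)] for c in range(3)]
    # channel swap RGB -> BGR
    bgr = [chw[2], chw[1], chw[0]]
    # raw scale, then subtract the mean of each (BGR) channel
    return [
        [[v * raw_scale - mean_bgr[c] for v in row] for row in bgr[c]]
        for c in range(3)
    ]


# One RGB pixel (1.0, 0.5, 0.0) with assumed BGR means (104, 117, 123):
print(preprocess([[[1.0, 0.5, 0.0]]], (104.0, 117.0, 123.0)))
# [[[-104.0]], [[10.5]], [[132.0]]]
```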

That is, in the Python shell, load the net, preprocess the image, copy it into the data blob and run net.forward().

It prints the top classes detected for the image.
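In CaffeNet’s deploy net, the class probabilities are the output of the final softmax (blob 'prob'), and the label names come from data/ilsvrc12/synset_words.txt. Selecting the top classes is then a sort by probability; a minimal sketch with hypothetical probabilities and labels:

```python
def top_k(probs, labels, k=5):
    """Return the k (label, probability) pairs with the highest probability."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(labels[i], probs[i]) for i in ranked[:k]]


# Hypothetical 4-class output and labels, for illustration only.
probs = [0.05, 0.70, 0.20, 0.05]
labels = ["cat", "dog", "fox", "owl"]
print(top_k(probs, labels, k=2))  # [('dog', 0.7), ('fox', 0.2)]
```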

Learn: solve the params on training data

It is now time to create your own model and to train its parameters on training data.

To train a network, you need:

- its model definition, as seen previously

- a second protobuf file, the solver file, describing the parameters for stochastic gradient descent.

For example, see the CaffeNet solver, models/bvlc_reference_caffenet/solver.prototxt.
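Among the solver parameters, the "step" learning rate policy divides the rate by a constant factor at regular intervals: $lr = base\_lr \times gamma^{\lfloor iter / stepsize \rfloor}$. A sketch of that schedule; the default values (base_lr 0.01, gamma 0.1, stepsize 100000) are assumed here to match the CaffeNet solver and should be checked against the actual file:

```python
def step_lr(iteration, base_lr=0.01, gamma=0.1, stepsize=100000):
    """Learning rate under the "step" policy: base_lr * gamma ** floor(iter / stepsize)."""
    return base_lr * gamma ** (iteration // stepsize)


for it in (0, 99999, 100000, 250000):
    print(it, step_lr(it))
```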

Usually, you define a train net, for training, with the training data, and a test net, for validation, with the test data. You can either define the train and test nets separately in the solver prototxt file (with the train_net and test_net fields),

or specify only one net prototxt file, adding an include { phase: TRAIN } or include { phase: TEST } statement to the layers that differ between the training and testing phases, such as the input data layers.
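With a single net file, each phase keeps the layers without an include rule plus the layers whose include phase matches. The sketch below models only that simplified rule (Caffe’s rules can also use exclude, stages and levels); the layer names are hypothetical:

```python
def layers_for_phase(layers, phase):
    """Keep the layers that apply to the given phase ("TRAIN" or "TEST").

    Simplified rule: a layer without an include phase is kept in both
    phases; a layer with one is kept only in that phase.
    """
    return [layer["name"] for layer in layers
            if layer.get("include_phase") in (None, phase)]


# Hypothetical net: two input layers, one per phase, sharing the rest.
net_layers = [
    {"name": "data_train", "include_phase": "TRAIN"},
    {"name": "data_test", "include_phase": "TEST"},
    {"name": "conv"},
    {"name": "loss"},
]
print(layers_for_phase(net_layers, "TRAIN"))  # ['data_train', 'conv', 'loss']
print(layers_for_phase(net_layers, "TEST"))   # ['data_test', 'conv', 'loss']
```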

Data can also be set directly in Python.

Load the solver in Python

Loading it with caffe.get_solver('solver.prototxt') gives, by default, the SGD solver. You can specify another solver_type in the solver prototxt file (ADAGRAD or NESTEROV). It’s also possible to load a solver class directly, for example with caffe.SGDSolver('solver.prototxt'),

but be careful: caffe.SGDSolver always runs SGD, whatever solver is configured in the prototxt file, so this is less reliable.

Now, it’s time to check that everything works well and to fill the layers with a forward propagation through the net (computation of net.blobs[k].data from the input layer up to the loss layer), with solver.net.forward().

To compute the gradients (computation of net.blobs[k].diff and net.params[k][j].diff from the loss layer back to the input layer), call solver.net.backward().

To launch one step of gradient descent, that is a forward propagation, a backward propagation and the update of the net params given the gradients (update of net.params[k][j].data), call solver.step(1).

To run the full gradient descent, that is max_iter steps, call solver.solve().
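Each solver step combines the gradient from the backward pass with weight decay and momentum. Caffe’s SGD solver updates a history term $V \leftarrow momentum \cdot V + lr \cdot (\nabla L + weight\_decay \cdot W)$, then $W \leftarrow W - V$. A toy sketch with a single scalar parameter and a hypothetical quadratic loss in place of a real net:

```python
def sgd_momentum(grad_fn, w, base_lr=0.1, momentum=0.9, weight_decay=0.0, max_iter=500):
    """Minimize a 1-D function with SGD + momentum:
        v <- momentum * v + lr * (grad + weight_decay * w)
        w <- w - v
    Each loop iteration plays the role of one solver.step(1).
    """
    v = 0.0
    for _ in range(max_iter):
        grad = grad_fn(w) + weight_decay * w   # backward pass + L2 regularization
        v = momentum * v + base_lr * grad      # history (momentum) update
        w = w - v                              # parameter update
    return w


# Toy "net": loss(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
print(sgd_momentum(lambda w: 2 * (w - 3), w=0.0))  # close to 3.0
```

With weight decay, the minimum shifts towards 0: for this loss and weight_decay=0.5, it is reached at $w = 6 / 2.5 = 2.4$.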

Input data, train and test set

In order to learn a model, you usually define a training set and a test set.

The input layer can be of several types:

- ‘Data’: for data saved in an LMDB database, as before

- ‘ImageData’: for images listed in a text file, one image path and its label per line

- ‘HDF5Data’: for data saved in HDF5 files
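The list file of an ‘ImageData’ layer is a plain text file with one image path and one integer label per line, given to the layer as the source of its image_data_param. A sketch that writes such a file; the file name and image paths are hypothetical:

```python
def write_image_list(path, samples):
    """Write a list file for an 'ImageData' layer: one "image_path label" per line."""
    with open(path, "w") as f:
        for image_path, label in samples:
            f.write(f"{image_path} {label}\n")


# Hypothetical image paths and integer class labels.
write_image_list("train.txt", [("images/cat_001.jpg", 0), ("images/dog_001.jpg", 1)])
```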

Compute accuracy of the model on the test data

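Once the model is solved, compute its accuracy on the test data by running forward passes of the test net (solver.test_nets[0]) over the test batches and averaging the output of its Accuracy layer.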
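The averaging itself is simple arithmetic. A sketch, assuming the test net has an Accuracy layer whose output blob is read after each forward pass and that all test batches have the same size; the predictions below are hypothetical:

```python
def batch_accuracy(predicted, expected):
    """Fraction of correct top-1 predictions in one batch."""
    correct = sum(1 for p, e in zip(predicted, expected) if p == e)
    return correct / len(expected)


def average_accuracy(batch_accuracies):
    """Average the per-batch accuracies over the test set
    (valid when all batches have the same size)."""
    return sum(batch_accuracies) / len(batch_accuracies)


# Hypothetical predictions for two test batches of 4 samples each.
accs = [batch_accuracy([0, 1, 1, 2], [0, 1, 2, 2]),
        batch_accuracy([3, 3, 0, 1], [3, 3, 0, 1])]
print("Accuracy: {:.3f}".format(average_accuracy(accs)))  # Accuracy: 0.875
```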

The higher the accuracy, the better!

Parameter sharing

Parameter sharing between Siamese networks
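In Caffe, two layers share their parameters when their param blocks carry the same name, for example param { name: "conv1_w" } in both branches of a Siamese net: both branches then use one single set of weights. The sketch below models only that lookup-by-name idea in plain Python; the class and parameter names are hypothetical, and Caffe additionally accumulates the gradients of both branches into the shared parameter:

```python
class ParamStore:
    """Hypothetical store handing out parameters by name."""

    def __init__(self):
        self._params = {}

    def get(self, name, size):
        # Create the parameter on first use; later layers asking for the
        # same name receive the very same list (no copy).
        if name not in self._params:
            self._params[name] = [0.0] * size
        return self._params[name]


store = ParamStore()
branch_a = {"weights": store.get("conv1_w", 4), "bias": store.get("conv1_b", 1)}
branch_b = {"weights": store.get("conv1_w", 4), "bias": store.get("conv1_b", 1)}

# An update through one branch is seen by the other: the weights are shared.
branch_a["weights"][0] = 0.5
print(branch_b["weights"][0])  # 0.5
```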

Recurrent neural nets in Caffe

Have a look at my tutorial about recurrent neural nets in Caffe.

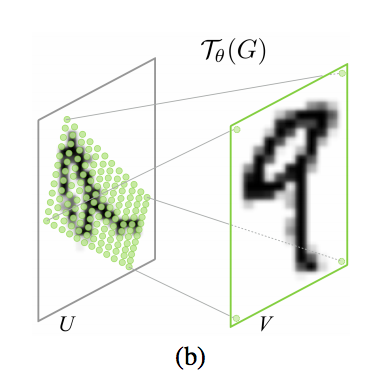

Spatial transformer layers

Have a look at my tutorial about improving classification with spatial transformer layers.

Caffe in Python

Define a model in Python

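It is also possible to define the net model directly in Python, with caffe.NetSpec and the layer functions of caffe.layers, and save it to a prototxt file:

writing str(n.to_proto()) to a file, where n is the NetSpec, produces the corresponding prototxt file.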
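The prototxt text format maps naturally onto nested Python structures: messages become blocks in braces, repeated fields become repeated keys. The hand-rolled serializer below is only a sketch of that mapping, not caffe.NetSpec (which builds real protobuf messages); it does not handle enum values such as MAX:

```python
def to_prototxt(message, indent=0):
    """Serialize a nested dict to protobuf text format (simplified).

    Dicts become nested messages, lists become repeated fields, strings
    are quoted and numbers are written as-is.
    """
    pad = "  " * indent
    lines = []
    for key, value in message.items():
        values = value if isinstance(value, list) else [value]
        for v in values:
            if isinstance(v, dict):
                lines.append(f"{pad}{key} {{")
                lines.append(to_prototxt(v, indent + 1))
                lines.append(f"{pad}}}")
            elif isinstance(v, str):
                lines.append(f'{pad}{key}: "{v}"')
            else:
                lines.append(f"{pad}{key}: {v}")
    return "\n".join(lines)


layer = {"layer": {"name": "conv", "type": "Convolution", "bottom": "data", "top": "conv",
                   "convolution_param": {"num_output": 3, "kernel_size": 5, "stride": 1}}}
print(to_prototxt(layer))
```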

Create your custom python layer

Let’s create a layer to add a value.

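Add a custom Python layer to your conv.prototxt file: a layer of type 'Python' whose python_param gives the module name (mypythonlayer), the layer class name and, optionally, a param_str string passed to the layer,

and create a mypythonlayer.py file, which has to be in the current directory or in the PYTHONPATH, defining the layer class with its setup, reshape, forward and backward methods.

This layer will simply add a value to its input.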
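The logic of such a layer fits in a few lines: forward adds the value, and backward passes the gradient through unchanged since $\partial (x + c) / \partial x = 1$. In pycaffe the class would subclass caffe.Layer and write into NumPy arrays (top[0].data[...] = ...); so that this sketch runs without Caffe, it uses a hypothetical FakeBlob holding plain lists, and assumes param_str holds just the value to add:

```python
class FakeBlob:
    """Hypothetical stand-in for a Caffe blob: flat data and diff lists."""

    def __init__(self, data=()):
        self.data = list(data)
        self.diff = [0.0] * len(self.data)

    def reshape(self, size):
        self.data = [0.0] * size
        self.diff = [0.0] * size


class AddValueLayer:
    """Sketch of a Python layer adding a constant to its input."""

    def setup(self, bottom, top):
        # assumption: param_str simply holds the value to add, e.g. "21"
        self.num = float(self.param_str)

    def reshape(self, bottom, top):
        # the output has the same size as the input
        top[0].reshape(len(bottom[0].data))

    def forward(self, bottom, top):
        top[0].data = [x + self.num for x in bottom[0].data]

    def backward(self, top, propagate_down, bottom):
        # d(x + num)/dx = 1: the gradient goes through unchanged
        if propagate_down[0]:
            bottom[0].diff = list(top[0].diff)


layer = AddValueLayer()
layer.param_str = "21"   # set by hand here; Caffe sets it from python_param
bottom, top = [FakeBlob([1.0, 2.0])], [FakeBlob()]
layer.setup(bottom, top)
layer.reshape(bottom, top)
layer.forward(bottom, top)
print(top[0].data)  # [22.0, 23.0]
```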

Caffe in C++

The blob

The blob (blob.hpp and blob.cpp) is a wrapper that manages memory independently of the CPU/GPU choice, using the SyncedMemory class, and provides a few functions similar to those of Python arrays, both for the data array and for the computed gradient (diff) array contained in the blob.

To initialize a blob, pass its shape to the constructor, for example Blob<float> blob(shape) with a vector<int> shape.

Methods on the blob:

- shape() and shape_string() to get the shape, shape(i) to get the size of the i-th dimension, or ShapeEquals() to compare shape equality

- Reshape(const vector<int>& shape), or ReshapeLike(const Blob& other) to take the shape of another blob

- count() for the number of elements (shape(0)*shape(1)*...)

- offset() to get the C++ index in the array

- CopyFrom() to copy the blob

- data_at() and diff_at() to read a single value

- asum_data() and asum_diff() for their L1 norm

- sumsq_data() and sumsq_diff() for their sum of squares (squared L2 norm)

- scale_data() and scale_diff() to multiply the data by a factor

- Update() to update the data array given the diff array
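Several of these methods are simple formulas over flat, row-major arrays: offset(n, c, h, w) is $((n \cdot C + c) \cdot H + h) \cdot W + w$, and Update() computes data ← data − diff. A Python sketch of a 4-D blob reproducing them; MiniBlob and its lowercase method names are hypothetical, not Caffe’s C++ API:

```python
class MiniBlob:
    """Pure-Python sketch of a 4-D blob stored as flat, row-major lists."""

    def __init__(self, shape):
        self.shape = tuple(shape)
        self.data = [0.0] * self.count()
        self.diff = [0.0] * self.count()

    def count(self):
        # number of elements: shape(0) * shape(1) * ...
        n = 1
        for dim in self.shape:
            n *= dim
        return n

    def offset(self, n, c=0, h=0, w=0):
        # flat index of element (n, c, h, w) in row-major order
        _, channels, height, width = self.shape
        return ((n * channels + c) * height + h) * width + w

    def data_at(self, n, c, h, w):
        return self.data[self.offset(n, c, h, w)]

    def asum_data(self):
        # L1 norm: sum of absolute values
        return sum(abs(x) for x in self.data)

    def sumsq_data(self):
        # sum of squares (squared L2 norm)
        return sum(x * x for x in self.data)

    def update(self):
        # data <- data - diff
        self.data = [d - g for d, g in zip(self.data, self.diff)]
```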

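To access the data in the blob from CPU code, use cpu_data() (read-only) or mutable_cpu_data() (read-write), and cpu_diff() or mutable_cpu_diff() for the gradients;

from GPU code, use gpu_data(), mutable_gpu_data(), gpu_diff() and mutable_gpu_diff().

Data transfer between GPU and CPU is handled automatically.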
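The transfer is lazy: SyncedMemory keeps track of where the up-to-date copy lives (UNINITIALIZED, HEAD_AT_CPU, HEAD_AT_GPU or SYNCED) and copies only when one side is read after the other was modified through a mutable accessor. A simplified Python model of that state machine, which counts transfers instead of allocating and copying real memory:

```python
class SyncedMemorySketch:
    """Simplified model of the CPU/GPU synchronization of a blob's memory."""

    def __init__(self):
        self.head = "UNINITIALIZED"
        self.copies = 0  # number of CPU<->GPU transfers performed

    def _to_cpu(self):
        if self.head == "UNINITIALIZED":
            self.head = "HEAD_AT_CPU"
        elif self.head == "HEAD_AT_GPU":
            self.copies += 1  # GPU -> CPU transfer
            self.head = "SYNCED"

    def _to_gpu(self):
        if self.head == "UNINITIALIZED":
            self.head = "HEAD_AT_GPU"
        elif self.head == "HEAD_AT_CPU":
            self.copies += 1  # CPU -> GPU transfer
            self.head = "SYNCED"

    def cpu_data(self):          # read-only access
        self._to_cpu()

    def mutable_cpu_data(self):  # write access: the CPU copy becomes the reference
        self._to_cpu()
        self.head = "HEAD_AT_CPU"

    def gpu_data(self):
        self._to_gpu()

    def mutable_gpu_data(self):
        self._to_gpu()
        self.head = "HEAD_AT_GPU"
```

For example, writing on the CPU and then reading twice on the GPU triggers a single transfer.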

Caffe provides abstraction methods to deal with data:

- caffe_set() and caffe_gpu_set() to initialize the data with a value

- caffe_add_scalar() and caffe_gpu_add_scalar() to add a scalar to the data

- caffe_axpy() and caffe_gpu_axpy() for $y \leftarrow a x + y$

- caffe_scal() and caffe_gpu_scal() for $x \leftarrow a x$

- caffe_cpu_sign() and caffe_gpu_sign() for $y \leftarrow \mathrm{sign}(x)$

- caffe_cpu_axpby() and caffe_gpu_axpby() for $y \leftarrow a x + b y$

- caffe_copy() to deep copy

- caffe_cpu_gemm() and caffe_gpu_gemm() for matrix multiplication $C \leftarrow \alpha A B + \beta C$

- caffe_gpu_atomic_add() when you need to update a value atomically (like requests in ACID databases, but for GPU threads in this case)

… and so on.
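The semantics of these BLAS-style functions can be checked on small inputs. A Python sketch; unlike the Caffe functions, which write in place through pointers, these helpers return new lists, and their names are illustrative:

```python
def axpy(a, x, y):
    """y <- a * x + y (what caffe_axpy computes), returned as a new list."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def axpby(a, x, b, y):
    """y <- a * x + b * y (what caffe_cpu_axpby computes)."""
    return [a * xi + b * yi for xi, yi in zip(x, y)]


def scal(a, x):
    """x <- a * x (what caffe_scal computes)."""
    return [a * xi for xi in x]


def gemm(alpha, A, B, beta, C):
    """C <- alpha * A B + beta * C for nested-list matrices (no transposes)."""
    rows, inner, cols = len(A), len(B), len(B[0])
    return [
        [alpha * sum(A[i][k] * B[k][j] for k in range(inner)) + beta * C[i][j]
         for j in range(cols)]
        for i in range(rows)
    ]


print(gemm(1, [[1, 2], [3, 4]], [[5, 6], [7, 8]], 0, [[0, 0], [0, 0]]))
# [[19, 22], [43, 50]]
```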

The layer

A layer, such as the SoftmaxWithLoss layer, needs a few functions taking the bottom and top blobs as arguments:

- Forward_cpu or Forward_gpu

- Backward_cpu or Backward_gpu

- Reshape

- optionally, LayerSetUp, to read non-standard fields (the layer-specific parameters)
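For SoftmaxWithLoss, the forward pass computes a softmax and the mean negative log-likelihood of the true labels, and the backward pass gives the gradient $(\mathrm{softmax}(s) - \mathrm{onehot}(label)) / N$. A Python sketch of that math, assuming one score vector per sample, normalization by the batch size $N$, and no ignore_label:

```python
import math


def softmax_with_loss_forward(scores, labels):
    """Forward: softmax of each sample's scores, then the mean negative
    log-likelihood of the true labels (normalized by batch size)."""
    probs = []
    loss = 0.0
    for row, label in zip(scores, labels):
        m = max(row)                      # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        p = [e / total for e in exps]
        probs.append(p)
        loss -= math.log(max(p[label], 1e-38))  # clamp to avoid log(0)
    return loss / len(labels), probs


def softmax_with_loss_backward(probs, labels, loss_weight=1.0):
    """Backward: d(loss)/d(scores) = (softmax - one_hot(label)) / batch size."""
    n = len(labels)
    grads = []
    for p, label in zip(probs, labels):
        g = list(p)
        g[label] -= 1.0
        grads.append([loss_weight * gi / n for gi in g])
    return grads
```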

The layer_factory is a set of helper functions to get the right layer implementation according to the engine (Caffe or cuDNN).
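The idea is a registry mapping each layer type to a creator function, which resolves the DEFAULT engine (cuDNN when Caffe is built with it, otherwise Caffe’s own implementation) before instantiating a class; Caffe registers such creators with the REGISTER_LAYER_CREATOR macro. A simplified Python sketch, where USE_CUDNN is an assumed build flag and class names returned as strings stand in for the layer objects:

```python
USE_CUDNN = False  # assumption: whether the build has cuDNN support

REGISTRY = {}


def register_creator(layer_type):
    """Register a creator function for a layer type."""
    def decorator(fn):
        REGISTRY[layer_type] = fn
        return fn
    return decorator


@register_creator("Convolution")
def get_convolution_layer(engine="DEFAULT"):
    if engine == "DEFAULT":
        # default engine: cuDNN when available, else Caffe's own code
        engine = "CUDNN" if USE_CUDNN else "CAFFE"
    if engine == "CAFFE":
        return "ConvolutionLayer"
    if engine == "CUDNN":
        return "CuDNNConvolutionLayer"
    raise ValueError(f"unknown engine: {engine}")


def create_layer(layer_type, engine="DEFAULT"):
    return REGISTRY[layer_type](engine)


print(create_layer("Convolution"))  # ConvolutionLayer
```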
