RAID: Software Disk Arrays

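RAID (Redundant Array of Inexpensive Disks) combines several physical disks into one large logical disk. At most RAID levels that disk both stores data and protects it. Its high availability and reliability make it a good fit for large-scale environments, where data needs more protection than in ordinary use.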

On Linux, software RAID arrays are created with the mdadm command.

RAID levels

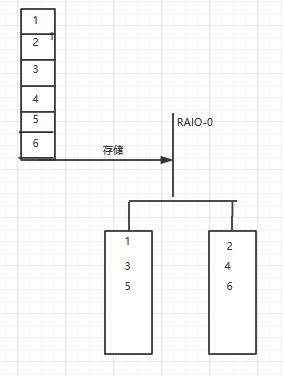

- RAID-0: striping mode. Best performance and 100% disk utilization, but no redundancy.

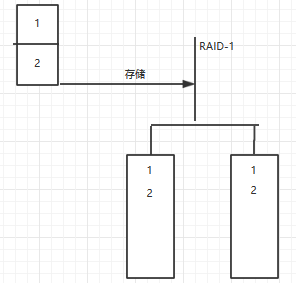

- RAID-1: mirroring mode. Better data safety, with 50% disk utilization when two disks are used.

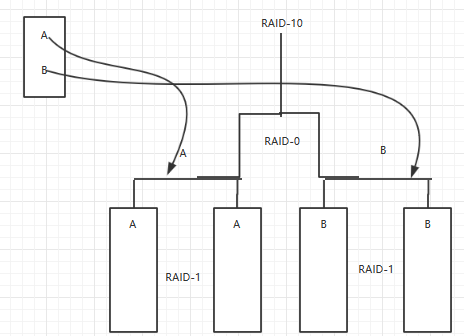

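- RAID-0+1: the disks are first grouped into RAID-0 arrays, and those arrays are then mirrored as a RAID-1. It balances performance and safety, with 50% disk utilization.

- RAID-1+0: the disks are first grouped into RAID-1 mirrors, and those mirrors are then striped as a RAID-0. It balances performance and safety, with 50% disk utilization.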

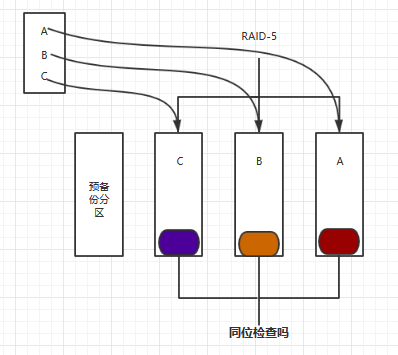

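- RAID-5: striping with distributed parity. It balances performance and safety. Usable capacity equals n-1 disks, where n is the number of disks.

- RAID-6: striping with double distributed parity. It balances performance and safety. Usable capacity equals n-2 disks.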
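The following minimal bash sketch turns the utilization figures above into usable-capacity numbers. It assumes all member disks are the same size and ignores md metadata overhead. raid_usable is a hypothetical helper, not part of mdadm.

```bash
#!/usr/bin/env bash
# Hypothetical helper: usable capacity of an array built from n equal disks.
# Usage: raid_usable LEVEL N SIZE   (the result is in the same unit as SIZE)
# Assumes equal-sized members and ignores md metadata overhead.
raid_usable() {
  local level=$1 n=$2 size=$3
  case "$level" in
    0)  echo $(( n * size )) ;;                 # striping: every disk holds data
    1)  echo "$size" ;;                         # mirroring: each disk holds a full copy
    5)  (( n >= 3 )) || { echo "RAID-5 needs at least 3 disks" >&2; return 1; }
        echo $(( (n - 1) * size )) ;;           # one disk's worth of parity
    6)  (( n >= 4 )) || { echo "RAID-6 needs at least 4 disks" >&2; return 1; }
        echo $(( (n - 2) * size )) ;;           # two disks' worth of parity
    10|01)
        (( n >= 4 && n % 2 == 0 )) || { echo "RAID-10/0+1 needs an even number of disks, at least 4" >&2; return 1; }
        echo $(( n * size / 2 )) ;;             # everything is stored twice
    *)  echo "unsupported level: $level" >&2; return 1 ;;
  esac
}

raid_usable 1 2 2000   # two 2000 GB disks mirrored: prints 2000
raid_usable 5 3 200    # three 200 MB partitions: prints 400
```

The second call matches the RAID-5 test later in this post. That array has three active 200 MB members and reports an Array Size of about 398 MiB.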

The disk array used by WD MyCloud

WD MyCloud uses RAID-1 (mirroring), so only half of the raw disk capacity is usable. When you store data on it or copy data off it, 4 TB of disks hold only 2 TB of actual data.

RAID-0 test

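RAID-0 is the striping mode and is best built from two disks of the same model and capacity. In this mode, the disks are first divided into equal-sized blocks (chunks). When a file is stored, it is split into chunks, and the chunks are written to the member disks in turn, as shown in the figure.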
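The round-robin placement can be modeled in a few lines of bash: chunk i lands on disk i mod n, at row i / n on that disk. This is a simplified sketch that assumes equal-sized members. stripe_map is a hypothetical name, and the kernel's real RAID-0 mapping handles more cases than this.

```bash
#!/usr/bin/env bash
# Hypothetical helper: where does logical chunk i land in an n-disk RAID-0?
# Simplified model: equal-sized members, chunks assigned round-robin.
stripe_map() {
  local chunk=$1 ndisks=$2
  local disk=$(( chunk % ndisks ))   # member disk index (0-based)
  local row=$(( chunk / ndisks ))    # stripe row, i.e. the chunk's offset on that disk
  echo "chunk $chunk -> disk $disk, row $row"
}

# A 2 MiB file with a 512K chunk size spans 4 chunks on a 2-disk array
for i in 0 1 2 3; do
  stripe_map "$i" 2
done
# chunk 0 -> disk 0, row 0
# chunk 1 -> disk 1, row 0
# chunk 2 -> disk 0, row 1
# chunk 3 -> disk 1, row 1
```

Consecutive chunks go to different disks, so a large sequential read or write keeps both disks busy at once. This is where RAID-0 gets its speed.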

The steps below create a RAID-0 array with mdadm:

- Create two 1 GB partitions, /dev/vdb10 and /dev/vdb11.

- Use mdadm to create a RAID-0 array named /dev/md0.

```shell
### Creating the partitions /dev/vdb10 and /dev/vdb11 is omitted
[root@zwj ~]# mdadm --create /dev/md0 --level=0 --raid-devices=2 /dev/vdb10 /dev/vdb11    # same as: mdadm -C /dev/md0 -l raid0 -n 2 /dev/vdb10 /dev/vdb11 (-C takes the array name, -l the RAID level, -n the number of member devices)
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
[root@zwj ~]# mdadm -E /dev/vdb10 /dev/vdb11    # show the RAID information stored on each member disk
/dev/vdb10:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : d26ff510:82cc5acf:b823eaae:c98c2e8f
           Name : zwj:0 (local to host zwj)
  Creation Time : Sun May 7 18:30:05 2017
     Raid Level : raid0
   Raid Devices : 2

 Avail Dev Size : 2096545 (1023.70 MiB 1073.43 MB)
    Data Offset : 2048 sectors
   Super Offset : 8 sectors
   Unused Space : before=1960 sectors, after=0 sectors
          State : clean
    Device UUID : c645eb97:9dc15ee8:9148e372:0c26b998

    Update Time : Sun May 7 18:30:05 2017
  Bad Block Log : 512 entries available at offset 72 sectors
       Checksum : 402c224f - correct
         Events : 0

     Chunk Size : 512K

    Device Role : Active device 0
    Array State : AA ('A' == active, '.' == missing, 'R' == replacing)
/dev/vdb11:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : d26ff510:82cc5acf:b823eaae:c98c2e8f
           Name : zwj:0 (local to host zwj)
  Creation Time : Sun May 7 18:30:05 2017
     Raid Level : raid0
   Raid Devices : 2

 Avail Dev Size : 2096545 (1023.70 MiB 1073.43 MB)
    Data Offset : 2048 sectors
   Super Offset : 8 sectors
   Unused Space : before=1960 sectors, after=0 sectors
          State : clean
    Device UUID : 54fd15e5:8ed74a79:0b1efd81:7995a016

    Update Time : Sun May 7 18:30:05 2017
  Bad Block Log : 512 entries available at offset 72 sectors
       Checksum : ab4434b5 - correct
         Events : 0

     Chunk Size : 512K

    Device Role : Active device 1
    Array State : AA ('A' == active, '.' == missing, 'R' == replacing)
[root@zwj ~]# mdadm --detail /dev/md0
/dev/md0:
        Version : 1.2
  Creation Time : Sun May 7 18:30:05 2017
     Raid Level : raid0
     Array Size : 2096128 (2047.00 MiB 2146.44 MB)
   Raid Devices : 2
  Total Devices : 2
    Persistence : Superblock is persistent

    Update Time : Sun May 7 18:30:05 2017
          State : clean
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0

     Chunk Size : 512K

           Name : zwj:0 (local to host zwj)
           UUID : d26ff510:82cc5acf:b823eaae:c98c2e8f
         Events : 0

    Number   Major   Minor   RaidDevice State
             252       26        0      active sync   /dev/vdb10
             252       27        1      active sync   /dev/vdb11
[root@zwj ~]# cat /proc/mdstat
Personalities : [raid0]
md0 : active raid0 vdb11[1] vdb10[0]
      blocks super 1.2 512k chunks

unused devices: <none>
[root@zwj ~]# mkdir -p /mnt/raid0    # create the mount point
[root@zwj ~]# mkfs.ext3 /dev/md0 >/dev/null
[root@zwj ~]# mount /dev/md0 /mnt/raid0
[root@zwj ~]# df -h
......
/dev/md0        2.0G  236M  1.7G  13% /mnt/raid0
```
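/proc/mdstat is the quickest way to see which arrays exist and which devices they contain. The bash sketch below parses mdstat-formatted text into one line per array. It runs against a sample copied from the session above. On a live system, you would call it with no argument so that it reads /proc/mdstat. mdstat_members is a hypothetical helper. It assumes the "mdX : active <level> dev[n] ..." line format seen here. Inactive or read-only arrays print their lines differently and are not handled.

```bash
#!/usr/bin/env bash
# Hypothetical helper: for each array line in mdstat-style text, print
# "<array> <level> <member>...". Assumes the "mdX : active <level> dev[n] ..." format.
mdstat_members() {
  local -a f
  local dev out
  while read -ra f; do
    [[ ${f[0]:-} == md* && ${f[1]:-} == ":" ]] || continue  # skip Personalities, block and footer lines
    out="${f[0]} ${f[3]}"                # array name and RAID level
    for dev in "${f[@]:4}"; do           # the remaining fields are member devices
      out+=" ${dev%%\[*}"                # drop "[n]" and any "(S)" spare / "(F)" faulty flag
    done
    echo "$out"
  done < "${1:-/proc/mdstat}"
}

# Sample copied from the /proc/mdstat output above
sample=$(mktemp)
cat > "$sample" <<'EOF'
Personalities : [raid0]
md0 : active raid0 vdb11[1] vdb10[0]
      blocks super 1.2 512k chunks

unused devices: <none>
EOF
mdstat_members "$sample"    # prints: md0 raid0 vdb11 vdb10
rm -f "$sample"
```

The fields are read into an array instead of being word-split. This way, a token like vdb11[1] is never treated as a glob pattern.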

RAID-1 test

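RAID-1 is the mirroring mode and is also best built from two disks of the same model and capacity. Its main advantage is that the data is backed up on every member. The cost is low disk utilization, as shown in the figure.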

```shell
[root@zwj raid1]# fdisk -l |grep "/dev/vdb1[2-9]"    # the new partitions are 200 MB each
/dev/vdb12           56186       56592      205096+  83  Linux
/dev/vdb13           56593       56999      205096+  83  Linux
[root@zwj raid0]# mdadm -C /dev/md1 -l 1 -n 2 /dev/vdb{12,13}
mdadm: Note: this array has metadata at the start and
    may not be suitable as a boot device.  If you plan to
    store '/boot' on this device please ensure that
    your boot-loader understands md/v1.x metadata, or use
    --metadata=0.90
Continue creating array? y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md1 started.
[root@zwj raid0]# mdadm -E /dev/vdb12 /dev/vdb13
/dev/vdb12:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : 0855d3d3:502aa3e1:9a09f0e8:abdb1e1a
           Name : zwj:1 (local to host zwj)
  Creation Time : Sun May 7 19:49:56 2017
     Raid Level : raid1
   Raid Devices : 2

 Avail Dev Size : 409905 (200.15 MiB 209.87 MB)
     Array Size : 204928 (200.13 MiB 209.85 MB)
  Used Dev Size : 409856 (200.13 MiB 209.85 MB)
    Data Offset : 288 sectors
   Super Offset : 8 sectors
   Unused Space : before=200 sectors, after=49 sectors
          State : clean
    Device UUID : e4909dcf:f2fb8812:0c5a70cb:021dd248

    Update Time : Sun May 7 19:49:58 2017
  Bad Block Log : 512 entries available at offset 72 sectors
       Checksum : 4478c6ef - correct
         Events : 17

    Device Role : Active device 0
    Array State : AA ('A' == active, '.' == missing, 'R' == replacing)
/dev/vdb13:
          Magic : a92b4efc
        Version : 1.2
    Feature Map : 0x0
     Array UUID : 0855d3d3:502aa3e1:9a09f0e8:abdb1e1a
           Name : zwj:1 (local to host zwj)
  Creation Time : Sun May 7 19:49:56 2017
     Raid Level : raid1
   Raid Devices : 2

 Avail Dev Size : 409905 (200.15 MiB 209.87 MB)
     Array Size : 204928 (200.13 MiB 209.85 MB)
  Used Dev Size : 409856 (200.13 MiB 209.85 MB)
    Data Offset : 288 sectors
   Super Offset : 8 sectors
   Unused Space : before=200 sectors, after=49 sectors
          State : clean
    Device UUID : 170d0094:52cdc3d9:993a58b1:b2f3c341

    Update Time : Sun May 7 19:49:58 2017
  Bad Block Log : 512 entries available at offset 72 sectors
       Checksum : aeefcbc0 - correct
         Events : 17

    Device Role : Active device 1
    Array State : AA ('A' == active, '.' == missing, 'R' == replacing)
[root@zwj ~]# mdadm --detail /dev/md1
/dev/md1:
        Version : 1.2
  Creation Time : Sun May 7 19:49:56 2017
     Raid Level : raid1
     Array Size : 204928 (200.13 MiB 209.85 MB)
  Used Dev Size : 204928 (200.13 MiB 209.85 MB)
   Raid Devices : 2
  Total Devices : 2
    Persistence : Superblock is persistent

    Update Time : Sun May 7 19:49:58 2017
          State : clean
 Active Devices : 2
Working Devices : 2
 Failed Devices : 0
  Spare Devices : 0

           Name : zwj:1 (local to host zwj)
           UUID : 0855d3d3:502aa3e1:9a09f0e8:abdb1e1a
         Events : 17

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync   /dev/vdb12
             252       29        1      active sync   /dev/vdb13
[root@zwj ~]# mkfs.ext3 /dev/md1 >/dev/null
[root@zwj ~]# mkdir -p /mnt/raid1
[root@zwj ~]# mount /dev/md1 /mnt/raid1
[root@zwj ~]# cd /mnt/raid1/
[root@zwj raid1]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G   13G  5.9G  69% /
/dev/vdb1        20G  936M   18G   5% /mydata
/dev/md127      2.0G  236M  1.7G  13% /mnt/raid0
/dev/md1        194M  5.6M  179M   4% /mnt/raid1    # only about 200 MB
# After removing /dev/vdb13:
[root@zwj ~]# mdadm --detail /dev/md126
/dev/md126:
        Version : 1.2
  Creation Time : Sun May 7 19:49:56 2017
     Raid Level : raid1
     Array Size : 204928 (200.13 MiB 209.85 MB)
  Used Dev Size : 204928 (200.13 MiB 209.85 MB)
   Raid Devices : 2
  Total Devices : 1
    Persistence : Superblock is persistent

    Update Time : Sun May 7 20:14:10 2017
          State : clean, degraded
 Active Devices : 1
Working Devices : 1
 Failed Devices : 0
  Spare Devices : 0

           Name : zwj:1 (local to host zwj)
           UUID : 0855d3d3:502aa3e1:9a09f0e8:abdb1e1a
         Events : 23

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync   /dev/vdb12
               0        0        2      removed
[root@zwj ~]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G   13G  5.9G  69% /
/dev/vdb1        20G  936M   18G   5% /mydata
/dev/md126      194M  5.6M  179M   4% /mnt/raid1
```
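After /dev/vdb13 is removed, the key change is the State line of mdadm --detail, which goes from "clean" to "clean, degraded". The minimal bash sketch below pulls that line out of --detail output and returns a non-zero status for a degraded array. It is fed lines copied from the output above. array_state is a hypothetical helper. On a real system, you could pipe mdadm --detail output into it as root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `mdadm --detail` output on stdin, print the State value,
# and return 1 if the array is degraded.
array_state() {
  local state
  state=$(sed -n 's/^ *State : //p')   # e.g. "clean" or "clean, degraded"
  echo "$state"
  [[ $state != *degraded* ]]           # exit status 0 only when not degraded
}

# Sample lines copied from the --detail output of /dev/md126 above
printf '%s\n' '          State : clean, degraded' ' Active Devices : 1' |
  array_state || echo "array is degraded"
# clean, degraded
# array is degraded
```

The pattern is anchored to lines that start with "State", so it does not match the "Array State" line printed by mdadm -E.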

RAID-10 test

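RAID 10 is formed by combining RAID 1 and RAID 0. A classic RAID 10 needs at least 4 disks and gives up half of the total disk capacity. In return it provides both performance and safety. Reads and writes both perform well because I/O goes to several disks in parallel. This makes it suitable for databases with heavy disk write I/O, while still protecting the data.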

The test uses four partitions, /dev/vdb{12..15}, each 200 MB in size.

```shell
[root@zwj ~]# mdadm -C /dev/md10 -l 10 -n 4 /dev/vdb{12..15}
mdadm: /dev/vdb13 appears to be part of a raid array:
       level=raid1 devices=2 ctime=Sun May 7 19:49:56 2017
Continue creating array? y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md10 started.
[root@zwj ~]# mdadm -D /dev/md10
/dev/md10:
        Version : 1.2
  Creation Time : Sun May 7 20:31:43 2017
     Raid Level : raid10
     Array Size : 407552 (398.00 MiB 417.33 MB)
  Used Dev Size : 203776 (199.00 MiB 208.67 MB)
   Raid Devices : 4
  Total Devices : 4
    Persistence : Superblock is persistent

    Update Time : Sun May 7 20:31:48 2017
          State : clean
 Active Devices : 4
Working Devices : 4
 Failed Devices : 0
  Spare Devices : 0

         Layout : near=2
     Chunk Size : 512K

           Name : zwj:10 (local to host zwj)
           UUID : 3d1b1b2e:daa4597f:bf838bd1:c6b82c78
         Events : 17

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync set-A   /dev/vdb12
             252       29        1      active sync set-B   /dev/vdb13
             252       30        2      active sync set-A   /dev/vdb14
             252       31        3      active sync set-B   /dev/vdb15
[root@zwj ~]# mkfs.ext3 /dev/md10 >/dev/null
[root@zwj ~]# mkdir /mnt/raid10;mount /dev/md10 /mnt/raid10
[root@zwj ~]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G   13G  5.9G  69% /
/dev/vdb1        20G  936M   18G   5% /mydata
/dev/md10       386M   11M  356M   3% /mnt/raid10
```
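"Layout : near=2" in the output above means md keeps two copies of each chunk on neighbouring devices. That is why vdb12/vdb13 and vdb14/vdb15 act as mirror pairs, and why each of set-A and set-B holds one full copy. The bash sketch below is a simplified model of the near layout as described in the md(4) man page: copy j of chunk c goes into slot c*k + j, and slots are filled row by row across the n devices. raid10_near is a hypothetical helper, not md's code.

```bash
#!/usr/bin/env bash
# Hypothetical helper: device/row of each copy of chunk c in an md RAID-10 "near"
# layout with n devices and k copies (simplified model, not kernel code).
raid10_near() {
  local chunk=$1 ndev=$2 copies=${3:-2} j slot out=""
  for (( j = 0; j < copies; j++ )); do
    slot=$(( chunk * copies + j ))               # copies occupy consecutive slots
    out+="dev$(( slot % ndev ))/row$(( slot / ndev )) "
  done
  echo "chunk $chunk: ${out% }"                  # trim the trailing space
}

# Four devices, near=2, as in /dev/md10 above
for c in 0 1 2 3; do raid10_near "$c" 4; done
# chunk 0: dev0/row0 dev1/row0
# chunk 1: dev2/row0 dev3/row0
# chunk 2: dev0/row1 dev1/row1
# chunk 3: dev2/row1 dev3/row1
```

Devices 0 and 2 (vdb12, vdb14) together hold one copy of every chunk, which matches set-A. Devices 1 and 3 hold the other copy, which matches set-B.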

RAID-10 can also be built in two stages. First, create two RAID-1 arrays, /dev/md1 and /dev/md2, from the four partitions.

Then create a RAID-0 array, /dev/md10, from /dev/md1 and /dev/md2:

```shell
mdadm --create /dev/md1 --level=1 --raid-devices=2 /dev/vdb12 /dev/vdb13
mdadm --create /dev/md2 --level=1 --raid-devices=2 /dev/vdb14 /dev/vdb15
mdadm --create /dev/md10 --level=0 --raid-devices=2 /dev/md1 /dev/md2
```

RAID-5 test

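In RAID-5, parity information is stored on every disk. For example, with 4 disks (spares not counted), space equal to one disk's capacity is spread across all of them to hold the parity for the whole array. If any one disk fails, we can replace it and rebuild the original data from the parity information.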
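RAID-5 parity is the bitwise XOR of the data chunks in a stripe. As a result, any single missing chunk equals the XOR of the surviving chunks and the parity. The toy bash sketch below uses one byte to stand in for a whole chunk. The byte values are arbitrary examples, and xor_all is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Toy model: each "chunk" is a single byte; a real chunk is e.g. 512K.
xor_all() {                            # XOR together all arguments
  local acc=0 v
  for v in "$@"; do acc=$(( acc ^ v )); done
  echo "$acc"
}

d0=0x5A d1=0x3C d2=0xF0                # data chunks of one stripe on a 4-disk RAID-5
parity=$(xor_all "$d0" "$d1" "$d2")    # stored on the fourth disk

# The disk holding d1 fails: rebuild it from the survivors plus parity
rebuilt=$(xor_all "$d0" "$d2" "$parity")
printf 'parity=0x%02X rebuilt d1=0x%02X\n' "$parity" "$rebuilt"
# parity=0x96 rebuilt d1=0x3C
```

This also explains why RAID-5 survives only one failure. With two chunks missing from the same stripe, a single XOR equation cannot recover both.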

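Characteristics:

- Provides better performance, since data is striped across the disks.

- Supports redundancy and fault tolerance.

- Supports hot spares.

- Uses one disk's worth of capacity to store parity information.

- No data is lost when a single disk fails. After the failed disk is replaced, the data can be rebuilt from the parity information.

- Suits transaction-oriented environments. Reads are faster than writes, because every write must also update parity.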

```shell
[root@zwj ~]# mdadm --create /dev/md5 -l 5 -n 3 --spare-devices=1 /dev/vdb{12,13,14,15}
mdadm: /dev/vdb12 appears to be part of a raid array:
       level=raid10 devices=4 ctime=Sun May 7 20:31:43 2017
mdadm: /dev/vdb13 appears to be part of a raid array:
       level=raid10 devices=4 ctime=Sun May 7 20:31:43 2017
mdadm: /dev/vdb14 appears to be part of a raid array:
       level=raid10 devices=4 ctime=Sun May 7 20:31:43 2017
mdadm: /dev/vdb15 appears to be part of a raid array:
       level=raid10 devices=4 ctime=Sun May 7 20:31:43 2017
Continue creating array? y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md5 started.
[root@zwj ~]# mdadm -D /dev/md5
/dev/md5:
        Version : 1.2
  Creation Time : Sun May 7 21:26:28 2017
     Raid Level : raid5
     Array Size : 407552 (398.00 MiB 417.33 MB)
  Used Dev Size : 203776 (199.00 MiB 208.67 MB)
   Raid Devices : 3
  Total Devices : 4
    Persistence : Superblock is persistent

    Update Time : Sun May 7 21:26:34 2017
          State : clean
 Active Devices : 3
Working Devices : 4
 Failed Devices : 0
  Spare Devices : 1

         Layout : left-symmetric
     Chunk Size : 512K

           Name : zwj:5 (local to host zwj)
           UUID : 27119caa:5e2deb6e:427c4d7f:536669ca
         Events : 18

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync   /dev/vdb12
             252       29        1      active sync   /dev/vdb13
             252       30        2      active sync   /dev/vdb14
             252       31        -      spare   /dev/vdb15
[root@zwj ~]# cat /proc/mdstat
Personalities : [raid0] [raid10] [raid6] [raid5] [raid4]
md5 : active raid5 vdb14[4] vdb15[3](S) vdb13[1] vdb12[0]
      blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>
[root@zwj ~]# mkfs.ext3 /dev/md5 >/dev/null
[root@zwj ~]# mkdir -p /mnt/raid5
[root@zwj ~]# mount /dev/md5 /mnt/raid5
[root@zwj ~]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G   13G  5.9G  69% /
/dev/vdb1        20G  936M   18G   5% /mydata
/dev/md5        386M   11M  356M   3% /mnt/raid5
[root@zwj ~]# cd /mnt/raid5
[root@zwj raid5]# dd if=/dev/zero of=50_M_file bs=1M count=50
50+0 records in
50+0 records out
bytes (52 MB) copied, 0.1711 s, 306 MB/s
[root@zwj raid5]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G   13G  5.9G  69% /
/dev/vdb1        20G  936M   18G   5% /mydata
/dev/md5        386M   61M  306M  17% /mnt/raid5
[root@zwj raid5]# echo "assume /dev/vdb13 fail---";mdadm --manage /dev/md5 --fail /dev/vdb13;mdadm -D /dev/md5    # simulate a failure of /dev/vdb13
assume /dev/vdb13 fail---
mdadm: set /dev/vdb13 faulty in /dev/md5
/dev/md5:
        Version : 1.2
  Creation Time : Sun May 7 21:26:28 2017
     Raid Level : raid5
     Array Size : 407552 (398.00 MiB 417.33 MB)
  Used Dev Size : 203776 (199.00 MiB 208.67 MB)
   Raid Devices : 3
  Total Devices : 4
    Persistence : Superblock is persistent

    Update Time : Sun May 7 21:37:40 2017
          State : clean, degraded, recovering
 Active Devices : 2    # 2 active disks, because 1 has failed
Working Devices : 3    # 3 devices in working state, including 1 spare
 Failed Devices : 1    # 1 failed disk
  Spare Devices : 1    # 1 spare

         Layout : left-symmetric
     Chunk Size : 512K

 Rebuild Status : 0% complete

           Name : zwj:5 (local to host zwj)
           UUID : 27119caa:5e2deb6e:427c4d7f:536669ca
         Events : 20

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync   /dev/vdb12
             252       31        1      spare rebuilding   /dev/vdb15    # RAID-5 is rebuilding automatically onto the spare
             252       30        2      active sync   /dev/vdb14
             252       29        -      faulty   /dev/vdb13    # the failed /dev/vdb13
[root@zwj raid5]# mdadm -D /dev/md5    # check whether the rebuild has finished
/dev/md5:
        Version : 1.2
  Creation Time : Sun May 7 21:26:28 2017
     Raid Level : raid5
     Array Size : 407552 (398.00 MiB 417.33 MB)
  Used Dev Size : 203776 (199.00 MiB 208.67 MB)
   Raid Devices : 3
  Total Devices : 4
    Persistence : Superblock is persistent

    Update Time : Sun May 7 21:37:47 2017
          State : clean
 Active Devices : 3
Working Devices : 3
 Failed Devices : 1
  Spare Devices : 0    # no spare left

         Layout : left-symmetric
     Chunk Size : 512K

           Name : zwj:5 (local to host zwj)
           UUID : 27119caa:5e2deb6e:427c4d7f:536669ca
         Events : 39

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync   /dev/vdb12
             252       31        1      active sync   /dev/vdb15
             252       30        2      active sync   /dev/vdb14
             252       29        -      faulty   /dev/vdb13
[root@zwj raid5]# cat /proc/mdstat
Personalities : [raid0] [raid10] [raid6] [raid5] [raid4]
md5 : active raid5 vdb14[4] vdb15[3] vdb13[1](F) vdb12[0]
      blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>
[root@zwj raid5]# mdadm --manage /dev/md5 --remove /dev/vdb13 --add /dev/vdb13;mdadm -D /dev/md5    # remove the failed /dev/vdb13 and add a new partition (here /dev/vdb13 again)
mdadm: hot removed /dev/vdb13 from /dev/md5
mdadm: added /dev/vdb13
/dev/md5:
        Version : 1.2
  Creation Time : Sun May 7 21:26:28 2017
     Raid Level : raid5
     Array Size : 407552 (398.00 MiB 417.33 MB)
  Used Dev Size : 203776 (199.00 MiB 208.67 MB)
   Raid Devices : 3
  Total Devices : 4
    Persistence : Superblock is persistent

    Update Time : Sun May 7 21:40:04 2017
          State : clean
 Active Devices : 3
Working Devices : 4
 Failed Devices : 0
  Spare Devices : 1

         Layout : left-symmetric
     Chunk Size : 512K

           Name : zwj:5 (local to host zwj)
           UUID : 27119caa:5e2deb6e:427c4d7f:536669ca
         Events : 41

    Number   Major   Minor   RaidDevice State
             252       28        0      active sync   /dev/vdb12
             252       31        1      active sync   /dev/vdb15
             252       30        2      active sync   /dev/vdb14
             252       29        -      spare   /dev/vdb13
[root@zwj raid5]# df -h
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G   13G  5.9G  69% /
/dev/vdb1        20G  936M   18G   5% /mydata
/dev/md5        386M   61M  306M  17% /mnt/raid5
```
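The "Layout : left-symmetric" line (shown as "algorithm 2" in /proc/mdstat) controls which member holds parity in each stripe. The bash sketch below follows the rule as we understand it from the Linux md RAID-5 driver. For stripe s on n disks, parity goes on disk (n-1) - (s mod n), and the data chunks follow it, wrapping around. Treat the formula as an assumption to check against the kernel source. raid5_left_symmetric is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the layout of one stripe in a left-symmetric RAID-5.
# Assumed rule: parity on disk p = (n-1) - (s mod n); data chunk j on disk (p + 1 + j) mod n.
raid5_left_symmetric() {
  local stripe=$1 ndisks=$2 p j d
  local -a row
  p=$(( ndisks - 1 - stripe % ndisks ))
  row[p]="P"
  for (( j = 0; j < ndisks - 1; j++ )); do
    d=$(( (p + 1 + j) % ndisks ))
    row[d]="D$(( stripe * (ndisks - 1) + j ))"   # global data-chunk number
  done
  echo "stripe $stripe: ${row[*]}"
}

# Three active disks, as in /dev/md5 above
for s in 0 1 2 3; do raid5_left_symmetric "$s" 3; done
# stripe 0: D0 D1 P
# stripe 1: D3 P D2
# stripe 2: P D4 D5
# stripe 3: D6 D7 P
```

Rotating parity this way spreads parity writes over all members. RAID-4, by contrast, sends every parity write to one dedicated disk.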